The Death of the Dumb Pipe!

How do you publish a small file from an FTP location to a JMS queue? This was the problem statement given to me when I got my first job. As any new graduate would, I thought of writing a fascinating, rocket-science program in some programming language to get this done.

My new boss understood my blind enthusiasm and diverted me towards evaluating an enterprise integration product. In 2010, open source was just starting to boom in every technology layer of the enterprise. Until then, the biggest open source hits were Linux and then Android. For many, open source was still a new and scary concept.

I focused on open source integration products, which were a new product category at that time. Some of today's popular enterprise integration vendors were laying their product and community foundations, starting from Apache Camel, with contributions from FuseSource (later acquired by Red Hat) and Talend, and on to MuleSoft, WSO2, etc.

I played with pretty much all of these products and more. I then spent multiple years focusing on each of them as I built my career around Enterprise Integration.

Integration Vs Custom Programming

BTW, I was able to solve the FTP -> JMS integration problem by writing a few lines of configuration (not a program, just configuration). It was super easy. But I was living in guilt: four years of engineering, and here I was writing a two-line Apache Camel route to move a file from FTP to JMS. You know what I mean! (Just google my name along with any of these products and you will see a lot of related questions to the community. Ignore all things naive; I was too young, come on.)

Why were Enterprise Integration products needed?

- The number of applications was growing: Yes, enterprises built a lot of applications as their business grew. All these applications needed to talk to each other: Yes, that's how businesses were able to bring more value to customers.

- Developers were writing too much code: Yes, especially a lot of Java code with a lot of classes and bugs, leading to performance issues and longer development cycles. (Yes, you can write 10 classes + 50 methods + completely unreadable code for the same FTP -> JMS use case.)

- Too many point to point integrations: Developers built on-demand direct integrations between applications. This led to architects losing track of what was being built and what was connected to what.

- Too many integration points = too many protocols to deal with: Adapters/connectors were treated like rocket science in those days, and it was super costly to build one yourself.

Birth of Enterprise Integration Products

I should actually call it ‘More awareness in enterprises of Integration Products’. Yes, this happened in 2010.

Multiple communities and product vendors came up with products under the category of ‘Enterprise Integration’, converging a lot of ideas like SOA, ESBs, middleware, MQs, web services, APIs, etc.

This actually improved the overall enterprise architecture in a lot of ways:

- Developer productivity (rise of Integration Developers) - You can talk to any popular protocol in a matter of minutes, as long as you have the right privileges to talk to that system, be it JMS queues, databases, SOAP/REST APIs or SaaS platforms. Further, developers were able to build integration pipelines in a matter of days using UI based drag and drop tools.

Developer productivity - UI based designer

- Improved architectural governance - It helped architects visualise and track the integrations across the enterprise, which led to more efficient management of schemas, data flows, etc.

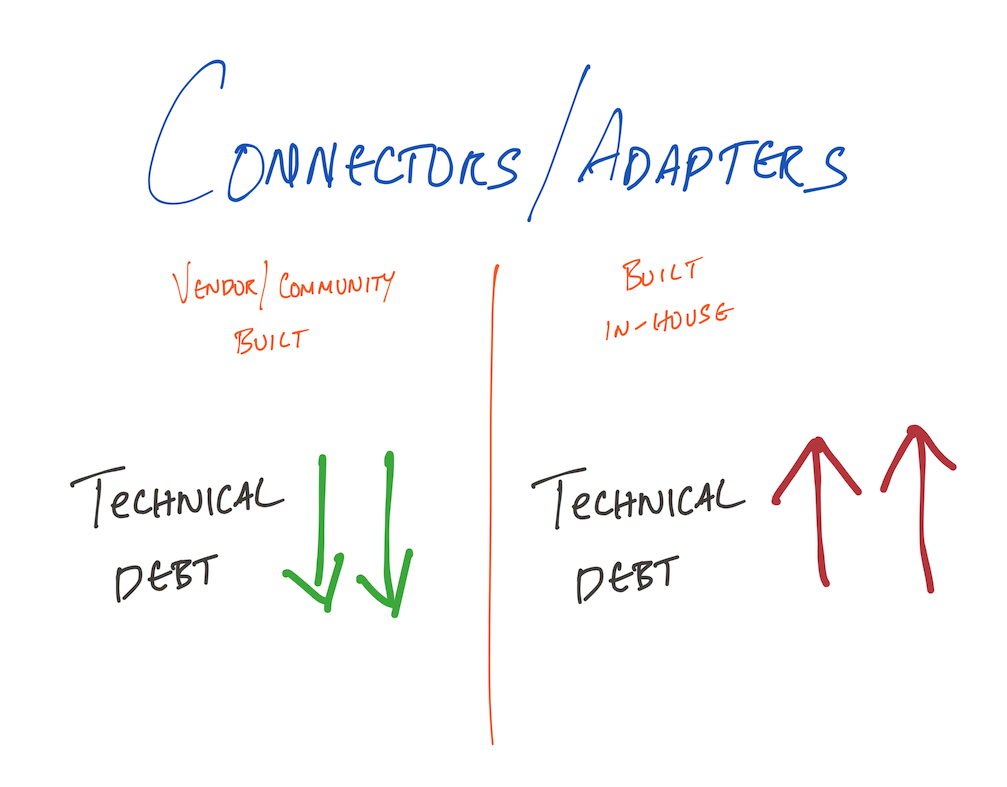

- Less technical debt - Enterprise developers didn't have to write a lot of boilerplate code. E.g. connector implementations were taken care of by the vendors/community.

Technical Debt - Integration vs Custom Programming

- Faster development cycle - It became possible for enterprises to connect numerous applications easily.

- More connected applications - Yes, obviously this made integration a no-brainer. More applications got connected, contributing to better business outcomes.

Enterprise Integration can't scale to business demands anymore

“Nothing lasts forever”

Today, the scope of Enterprise Integration technologies has shrunk within the end-to-end solution architecture. Integration middleware worked; there is no flaw in the way it evolved over the past decade. In particular, integration products streamlined the overall enterprise architecture in a lot of ways. Unfortunately, it was over-architected without addressing the fundamental flaws in the underlying technologies/platforms.

Credits - https://xkcd.com/2347/

Enterprise Integration is a smart workaround to mitigate some of the technology immaturities around data storage. But workarounds are meant for the short term, and they don't last long.

Let's see why it was a workaround:

Building dumb integration pipes: This was the rule of thumb given to any integration developer: “Keep the integration pipelines as dumb as possible = No business rules = No complex transformations”. This was etched into every integration developer, and every pipeline was built on top of this fundamental philosophy. Why?

- In-memory limitations - All these integration platforms were built on traditional runtimes, e.g. Tomcat. These runtimes depend on the limited heap space available within a VM/machine. When you are processing 10 TPS (transactions per second) on an 8 GB machine, you don't have room to do any computation on the data; the faster you send the data out, the better it is for the runtime. If you add any business logic, e.g. a simple aggregate function (SUM, COUNT, etc.), your runtime will start accumulating messages and blow into pieces.

Memory constraints

- Store First, Process Next - This is a golden philosophy in enterprise architecture, isn't it? More than a philosophy, it's a habit. If you want to build a website, first design the database tables; if you want to build an integration pipe, first figure out where you will store the data; if you want to process data, first store it somewhere. In order to process data, we have to accumulate it first! This is not just a habit. We got into it because of the previous limitation: we relied only on in-memory (RAM) capacity to handle data. We were able to build plenty of integrations, but we were never able to store the data in the pipe itself, and obviously you can't permanently store data in memory. All those million-dollar pipes are dumb; you still need another multi-tier architecture to store your data.

Losing real-time advantage

This really means that “building dumb integration pipelines”, or “integration pipelines without business rules”, was just an approach to mitigate the underlying technology limitations. We clung too tightly to building dumb pipes instead of trying to fix the infrastructure limitations under the hood.

“Q: Hey, come on, we deploy integration pipelines using Kubernetes. We can handle a lot of traffic.”

“A: As the integration market matured, we also improved the technologies around scalability. Kubernetes is one great example: it is still a great technology for a lot of use cases and it has a promising future. But for Enterprise Integration, Kubernetes is a horse blinker. Integration pipelines were predominantly stateless services, so we were able to scale them to handle more traffic, but we were never able to efficiently process data on the fly. (I am not saying Kubernetes is bad for stateful services. Enterprise Integration products narrowed in too much on the philosophy of building dumb pipes, isolating themselves from data processing patterns.)”

Flaws in Enterprise Integration

Let's see why Enterprise Integration cannot cope with business demands going forward:

- Value is in the pipeline: The more connected applications you have, the less you can afford to rely on a ‘data at rest’ approach to generate business value. With more applications connected and data moving fast, the window of opportunity to process data is getting narrower. This means businesses cannot wait for data to accumulate before processing it. Basically, businesses need smarter pipes, not dumb ones. Smarter here refers to the ability to store and process data on the fly.

Dumb pipes

- Integration is tightly coupled: When you build integration pipelines, you tightly couple your mapping transformations to the source/destination protocols. This means you can't scale beyond a threshold. E.g. you may be able to scale your integration pipeline to 100 pods in Kubernetes, but if your destination REST API can't handle that volume, you are going to kill it.

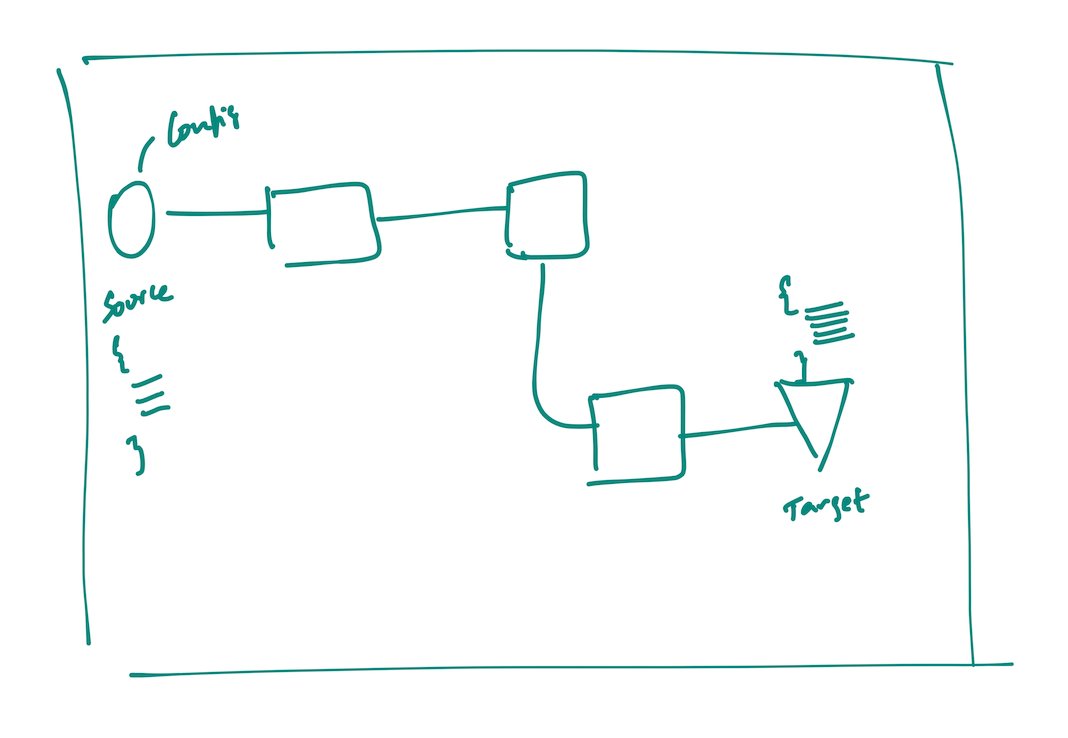

Typical integration pipeline

- Point to point integration problems still exist: The whole promise of integration products was to avoid point to point integrations. Instead, integration products created an envelope on top of direct integrations. If we take a step back and look at all the integration flows we built, it's déjà vu: "the same point to point integrations inside a costly wrapper".

Point to point integrations stay as is

- Incomplete Event Driven Architecture: Of course integration was built on event driven principles, e.g. JMS queues. We built nice event driven pipelines using queues, but in the end, didn't we forcefully kill those events by passively storing them somewhere? (We will talk about batch processing in a separate blog.)

In summary, middleware/integration products became quite critical in enterprises. Unfortunately, these products did not evolve as well as enterprises did. Businesses are looking for data that is active, data that is on the move, so they can process a business event as and when it happens and generate value for a customer as fast as they can. Integration products can't cope with this evolution.

Will Enterprise Integration be wiped out?

Absolutely not. But its scope is shrinking. Enterprise Integration products create a network of applications within enterprises, but an application network is overkill when you cannot process the data within the network.

Enterprise Service Bus(ESB) vs Event Stream Processing(ESP)

The only useful pattern that we all learnt from integration products is building reusable adapters/connectors. There will always be a diverse set of protocols and there will always be a need for protocol bridging/translation, but there will not be a need for integration pipelines. Dumb pipes will diminish. “Processing data while it's on the move” is what is helping enterprises today and what will dominate enterprise architecture in the future.

Event Streaming - The way forward

As the number of applications grows, they will generate more and more meaningful events: events around your customers, partners, business transactions, etc.

If you look at events as they are, they have life in them. They are generated at a particular time, based on a particular action.

If you build dumb pipes, events may survive for a few seconds after they are born, but they will die as soon as they reach the destination (storage).

All these streams of events are meant to be captured as they are, kept alive as they are, and processed whenever you need to, without flattening them to death.

To do this, you need a platform that can move data and also process it while it is moving.

This is not as simple as building another messaging product. It is a platform that converges traditional storage capabilities, messaging architecture, data processing patterns and distributed systems into one.

Apache Kafka is one technology that has evolved into an Event Streaming Platform to address these gaps. It is evolving into the foundation of any enterprise. It doesn't matter whether you come from enterprise integration, data integration, data analytics, infrastructure or security: Apache Kafka is a foundation that you can't skip.

By the way, have you missed some of the recent updates on Kafka? It's time to refresh yourself:

- Apache Kafka is NOT just a data ingestion layer for data lakes, and it is NOT meant only for data analytics people.

- Apache Kafka is NOT just another message broker replacing traditional message brokers like RabbitMQ, ActiveMQ, HornetQ, TIBCO EMS, Solace, IBM MQ, etc.

Apache Kafka today

Before jumping into the technology, let's see how Event Streaming overcomes some of the drawbacks we discussed in Enterprise Integration. (I will use Apache Kafka for the examples; in my view, there is no comparable alternative when it comes to an Event Streaming Platform.)

- Point to point integration is a real anti-pattern: It is almost impossible to create point to point integrations with Event Streaming platforms. The data producers/connectors, the data transformation layer and the data consumers/connectors are completely decoupled from one another. That means a stream from Producer A is not intended for any particular consumer! Anyone can consume this stream, and any number of consumers can consume it.

- Persistence out of the box - All of your data flows come with persistence built in, which enables event democratization. Basically, if an event is made available in a Kafka topic, it is available to N consumers, and each consumer can process the event at its own pace.

Persistence in Event Streaming
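To make the decoupling and persistence points concrete, here is a minimal sketch in plain Python. It is a toy in-memory model and not the Kafka client API: the `TopicLog` class, the consumer names and the `max_events` parameter are all made up for illustration. The idea it tries to show is that reading does not remove an event, and each consumer tracks its own position in the log.

```python
from collections import defaultdict


class TopicLog:
    """Toy in-memory stand-in for a single-partition topic (hypothetical, not a Kafka API)."""

    def __init__(self):
        self._events = []                 # append-only: reading never removes an event
        self._offsets = defaultdict(int)  # next offset to read, tracked per consumer name

    def append(self, event):
        """Producer side: store the event and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer, max_events):
        """Consumer side: return up to max_events starting at this consumer's own offset."""
        start = self._offsets[consumer]
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch


log = TopicLog()
for order_id in range(5):
    log.append({"order_id": order_id})

# A fast consumer drains everything in one go; a slow one takes two events at a time.
fast_batch = log.poll("analytics", max_events=10)
slow_batch = log.poll("billing-api", max_events=2)
slow_batch_2 = log.poll("billing-api", max_events=2)
```

The producer never needs to know who is reading, and the slow `billing-api` consumer is not flooded the way a destination REST API behind a scaled-out dumb pipe would be, because it pulls only what it can handle.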

- No more dumb pipes: There is no limitation when it comes to data processing. Be it a simple message to message mapping, applying an aggregate function to a bunch of fast moving data, or joining multiple streams at once to correlate data, all of that is supported out of the box.

- No more multi-tier architecture for data lookups - If you want to enrich a particular event, you don't have to call a web service that calls a cache that is in turn backed by a database. Nope. In event streaming, lookups are as simple as a SQL join. For example, you can have N event streams in Apache Kafka, each travelling at a different speed.

Stream A: Fast shopping cart events

Stream B: Slow/static customer profile data

If you want to enrich Stream A with the customer address, you don't have to build an N-tier web services layer for it. It's a simple join from Stream A to Stream B, and the lookup is done! Imagine this: as more and more events are onboarded to Kafka, the possibilities are endless. A lot of streams can be correlated to generate value, as compared to building numerous dumb pipes without any immediate value for the business.

Lookups - ESB vs ESP
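The Stream A to Stream B lookup can be sketched in plain Python as follows. This is only an illustration of the join idea, not Kafka Streams or ksqlDB code: the function name, the `timeline` structure and the field names (`customer_id`, `address`, `item`) are assumptions made for the example. The slow profile stream is kept as a small keyed view, and every fast cart event is enriched from it as it arrives.

```python
def enrich_cart_events(timeline):
    """Join each cart event with the latest known profile for the same customer.

    `timeline` is an iterable of (stream_name, record) pairs in arrival order.
    """
    profiles = {}  # materialised view of Stream B, keyed by customer_id
    enriched = []
    for stream, record in timeline:
        if stream == "profiles":
            # Slow stream: the newest profile record replaces the older one.
            profiles[record["customer_id"]] = record
        elif stream == "cart":
            # Fast stream: look up the address locally, no web service call needed.
            profile = profiles.get(record["customer_id"])
            address = profile["address"] if profile else None
            enriched.append({**record, "address": address})
    return enriched


timeline = [
    ("profiles", {"customer_id": "c1", "address": "1 Main St"}),
    ("cart", {"customer_id": "c1", "item": "book"}),
    ("profiles", {"customer_id": "c1", "address": "9 Oak Ave"}),
    ("cart", {"customer_id": "c1", "item": "pen"}),
    ("cart", {"customer_id": "c2", "item": "lamp"}),
]
enriched = enrich_cart_events(timeline)
```

Each cart event picks up whatever address was current when it arrived, and a customer with no profile yet simply gets `None` here; a real platform would let you choose how to handle that case.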

- Be time sensitive - Event Streaming supports time windows out of the box. For example, if you are a bank looking to protect your customers from fraudulent transactions, you can create a real-time window on the fly and apply an aggregate function, such as a count of similar transactions from the same card over the past 30 seconds. You can't afford to push all of these transactions through a dumb pipe into a database before predicting fraud, as you would lose time. Your data pipelines are naturally smarter.

Event Streaming Processing - ksqlDB
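As a rough illustration of the 30-second count, here is a small sliding-window sketch in plain Python. It is not ksqlDB syntax, and it simplifies "similar transactions" to "transactions on the same card". The threshold of 3 and the sample data are invented for the example.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 30
THRESHOLD = 3  # hypothetical rule: 3 or more transactions inside the window is suspicious


def flag_suspicious(transactions):
    """Yield (card, timestamp, count) whenever a card reaches THRESHOLD within the window.

    `transactions` is an iterable of (timestamp_seconds, card) pairs in time order.
    """
    recent = defaultdict(deque)  # card -> timestamps still inside the window
    for ts, card in transactions:
        window = recent[card]
        window.append(ts)
        # Evict timestamps that are 30 seconds old or older.
        while window[0] <= ts - WINDOW_SECONDS:
            window.popleft()
        if len(window) >= THRESHOLD:
            yield card, ts, len(window)


transactions = [
    (0, "card-A"), (5, "card-B"), (10, "card-A"),
    (20, "card-A"), (45, "card-A"), (50, "card-B"),
]
alerts = list(flag_suspicious(transactions))
```

The state kept per card is only the handful of timestamps still inside the window, so the count is available the moment the third transaction arrives, without first landing everything in a database.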

- Simpler data governance - When you bring all of your enterprise events together, you have a lot more visibility into how your data is evolving, as opposed to exporting the data from dumb pipes to a data lake and then running batch processes to analyse it. Event streaming gives you immediate visibility into how your data evolves through multiple hops, which helps ensure privacy and meet regulatory requirements.

In summary, Enterprise Integration served a purpose: it simplified how applications communicate. But because these products don't have the ability to process events on the fly, events are unfortunately forced into passive data stores.

Also, when you build application networks with dumb integration pipes, the opportunity to process/react to those events is lost permanently.

Event Stream Processing is the prime focus for enterprises today. It allows them to watch out for important events and react to them as and when they occur, be it simple data processing or complex event processing.

Also, most importantly, don't try to recreate enterprise integration patterns in Event Streaming platforms as is. They can't be compared like for like. Event Streaming is a very different paradigm from Enterprise Integration.

If you are an Integration Architect or a developer and have spent a lot of time integrating applications, it's time to get started on Event Streaming. Retain your integration experience, it is golden. Unlearn the traditional integration technologies you know, learn the Event Streaming paradigm from the bottom up, and generate value out of your events before they become too old!

Episode 2 is coming, watch this space.
